Abstract

Human-robot interaction through mixed reality (MR) technologies enables novel, intuitive interfaces to control robots in remote operations. Such interfaces facilitate operations in hazardous environments, where human presence is risky, yet human oversight remains crucial. Potential environments include disaster response scenarios and areas with high radiation or toxic chemicals.

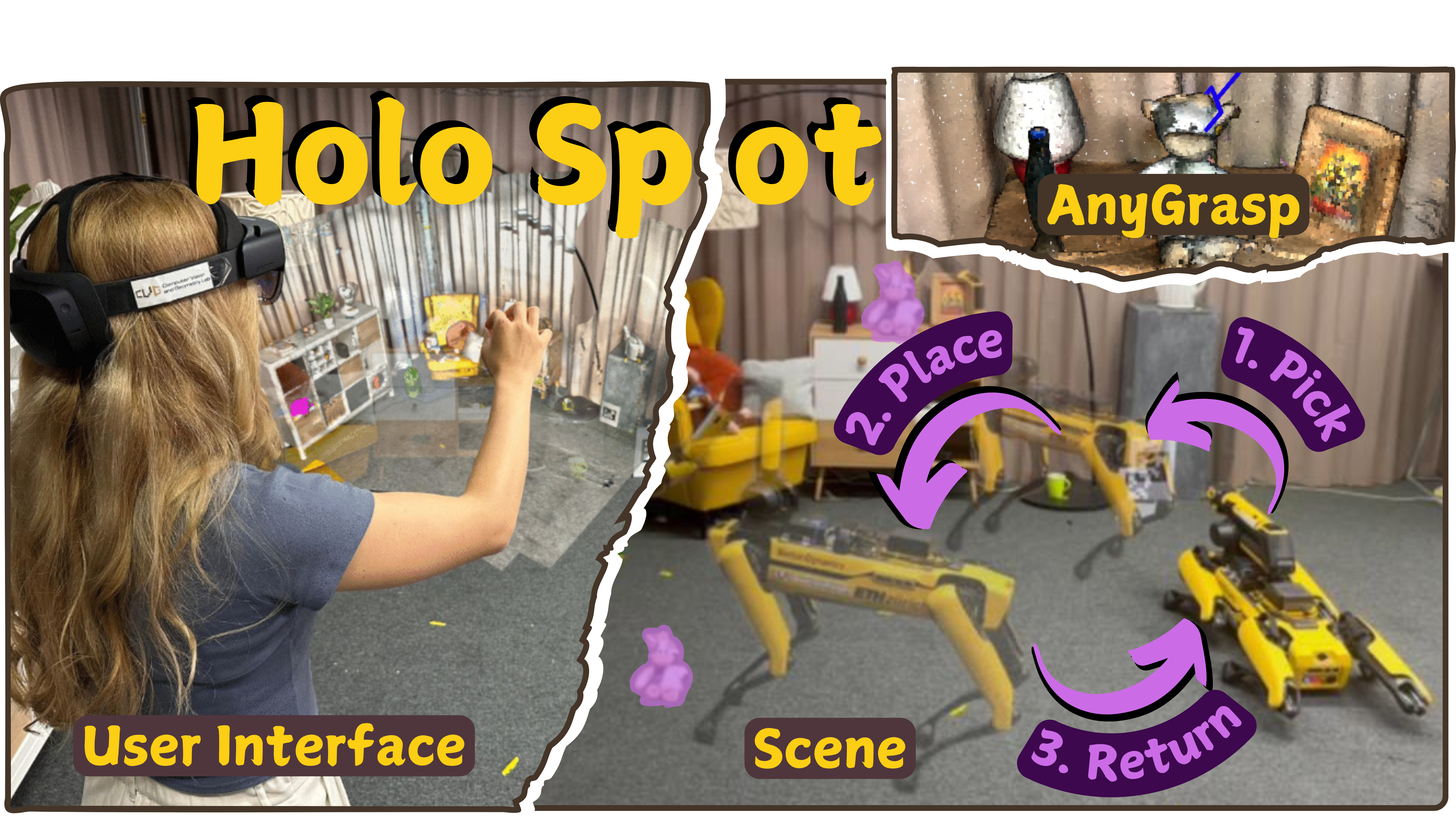

In this paper, we present an interface system that projects a 3D representation of a scanned room as a scaled-down 'dollhouse' hologram, allowing users to select and manipulate objects through a straightforward drag-and-drop interface. We then translate these drag-and-drop commands into real-time robot actions, building on the recent spot-compose framework.
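
To make the dollhouse idea concrete, the following is a minimal sketch of how a drop position on the miniature could be mapped back to room coordinates. It assumes the hologram is a uniformly scaled copy of the room, rotated only about a vertical z axis and then translated. The names `DollhousePose`, `room_to_dollhouse`, `dollhouse_to_room`, and `drop_to_command`, as well as the command dictionary layout, are hypothetical and are not taken from the HoloSpot application or from spot-compose.

```python
import math
from dataclasses import dataclass
from typing import Dict, Tuple

Vec3 = Tuple[float, float, float]


@dataclass(frozen=True)
class DollhousePose:
    """Hypothetical placement of the miniature in the headset frame."""
    origin: Vec3   # where the room origin appears in the headset frame (metres)
    yaw: float     # rotation of the miniature about the vertical z axis (radians)
    scale: float   # miniature size / real size, e.g. 0.05 for a 1:20 model


def room_to_dollhouse(p: Vec3, pose: DollhousePose) -> Vec3:
    """Scale, rotate about z, then translate a room point into the hologram."""
    c, s = math.cos(pose.yaw), math.sin(pose.yaw)
    x, y, z = (pose.scale * v for v in p)
    return (c * x - s * y + pose.origin[0],
            s * x + c * y + pose.origin[1],
            z + pose.origin[2])


def dollhouse_to_room(p: Vec3, pose: DollhousePose) -> Vec3:
    """Invert room_to_dollhouse: undo translation, rotation, then scale."""
    dx, dy, dz = (p[i] - pose.origin[i] for i in range(3))
    c, s = math.cos(pose.yaw), math.sin(pose.yaw)
    # The inverse of a rotation matrix is its transpose.
    x = c * dx + s * dy
    y = -s * dx + c * dy
    return (x / pose.scale, y / pose.scale, dz / pose.scale)


def drop_to_command(object_id: str, drop_point: Vec3,
                    pose: DollhousePose) -> Dict[str, object]:
    """Package a drag-and-drop event as an illustrative pick-and-place request."""
    return {
        "action": "pick_and_place",
        "object_id": object_id,
        "target_xyz": dollhouse_to_room(drop_point, pose),
    }


if __name__ == "__main__":
    pose = DollhousePose(origin=(0.5, 1.0, 1.2), yaw=math.pi / 2, scale=0.05)
    # A user drops a (hypothetical) object "mug_3" at this point on the hologram.
    print(drop_to_command("mug_3", (0.35, 1.1, 1.24), pose))
```

A real system would likely use a full 6-DoF transform between the headset and robot frames; the yaw-only pose here just keeps the inverse mapping easy to read.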

The Unity-based application provides an interactive tutorial and a user-friendly experience. Through comprehensive end-to-end testing, we validate the system's capability in executing pick-and-place tasks, and a complementary user study affirms that the interface's controls are intuitive. Our findings highlight the advantages of this interface in improving user experience and operational efficiency. This work lays the groundwork for a robust framework that advances the potential for seamless human-robot collaboration in diverse applications.

Video

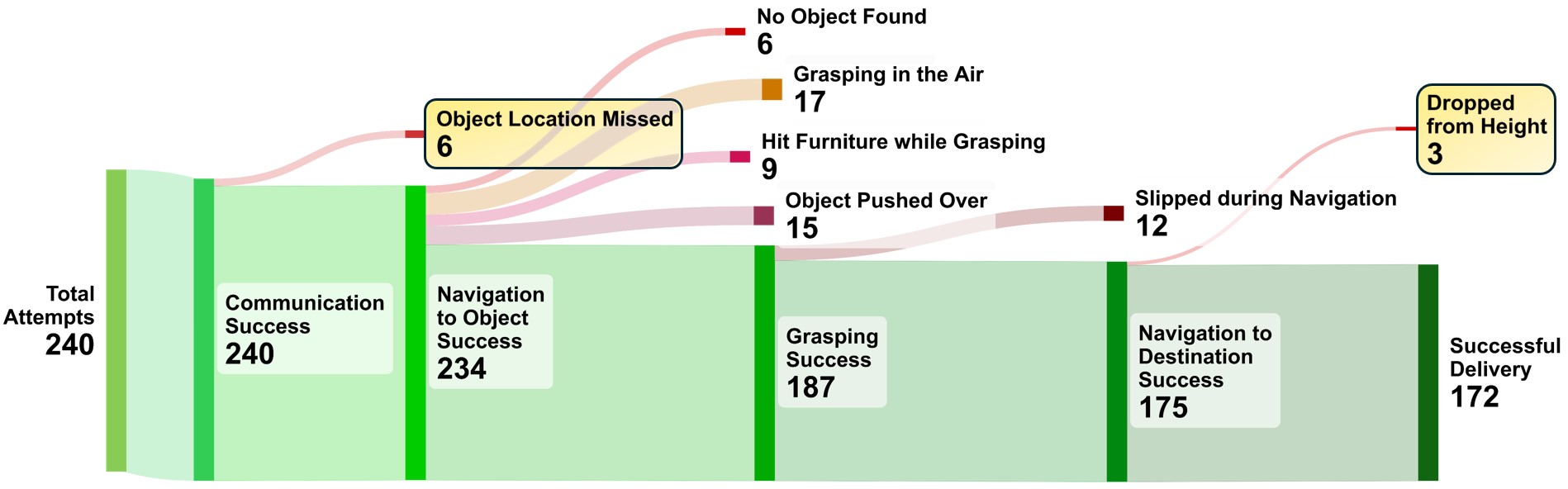

Pick-and-Place Results

We conducted 240 trials of the pick-and-place task using six distinct objects that differ in grasping difficulty and pick-up location. The overall success rate was 72%.
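
As a rough guide to how much sampling uncertainty surrounds a rate measured over 240 trials, the sketch below computes a Wilson score interval from the reported proportion and trial count. This is an illustrative calculation and not an analysis from the paper. It assumes the trials are independent with a common success probability, which may not hold when six objects of differing difficulty are pooled. The function name `wilson_interval` is our own.

```python
import math
from typing import Tuple


def wilson_interval(p_hat: float, n: int, z: float = 1.96) -> Tuple[float, float]:
    """Wilson score interval for a binomial proportion.

    p_hat: observed success proportion, n: number of trials,
    z: normal quantile (1.96 corresponds to roughly 95% coverage).
    """
    if n <= 0:
        raise ValueError("n must be positive")
    if not 0.0 <= p_hat <= 1.0:
        raise ValueError("p_hat must lie in [0, 1]")
    denom = 1.0 + z * z / n
    centre = (p_hat + z * z / (2 * n)) / denom
    half = z * math.sqrt(p_hat * (1 - p_hat) / n + z * z / (4 * n * n)) / denom
    return centre - half, centre + half


if __name__ == "__main__":
    # Values reported above: 240 trials, 72% overall success.
    low, high = wilson_interval(0.72, 240)
    print(f"approx. 95% interval: {low:.3f} to {high:.3f}")
```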

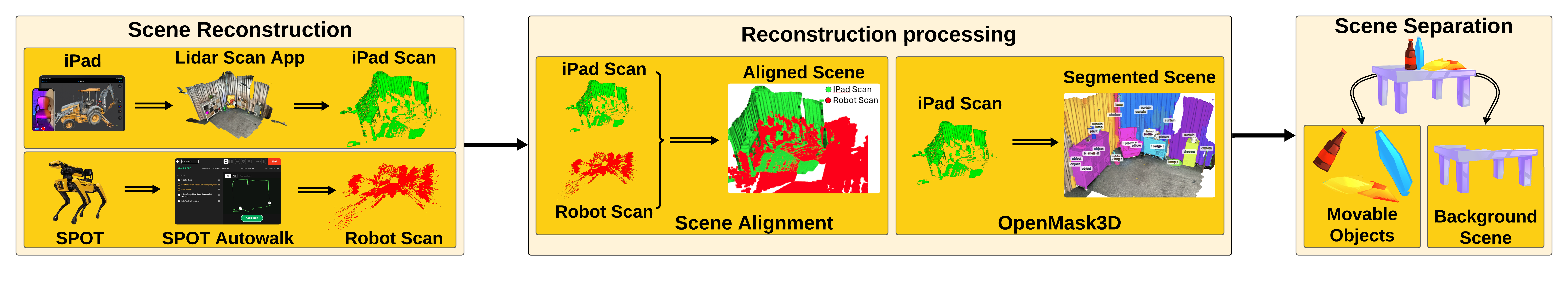

Offline Pipeline

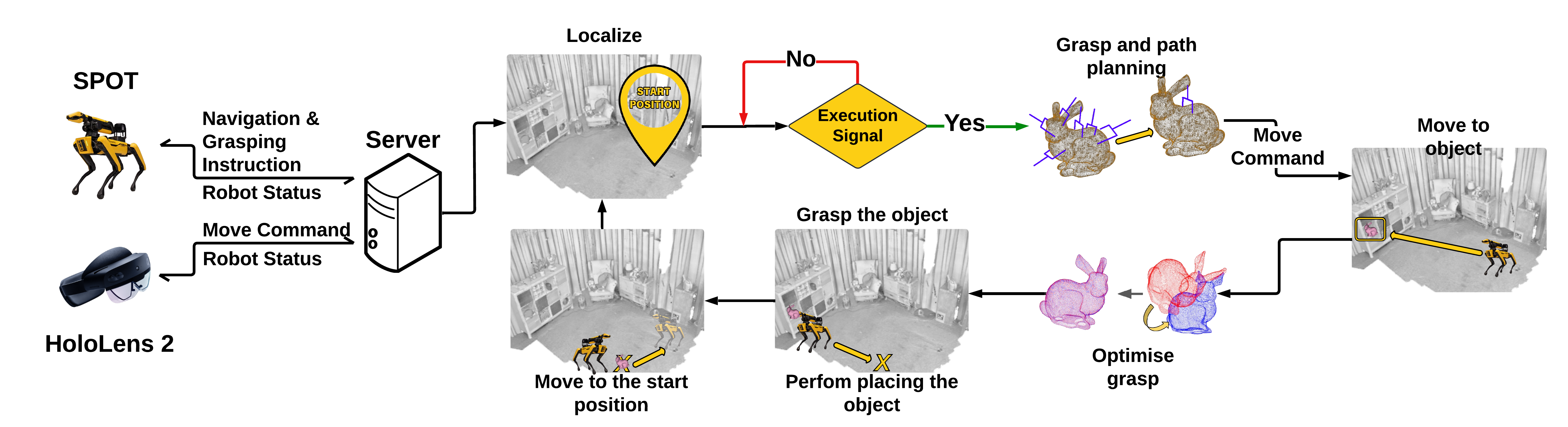

Online Pipeline

BibTeX

@misc{garcia2024holospotintuitiveobjectmanipulation,
  title={HoloSpot: Intuitive Object Manipulation via Mixed Reality Drag-and-Drop},
  author={Pablo Soler Garcia and Petar Lukovic and Lucie Reynaud and Andrea Sgobbi and Federica Bruni and Martin Brun and Marc Zünd and Riccardo Bollati and Marc Pollefeys and Hermann Blum and Zuria Bauer},
  year={2024},
  eprint={2410.11110},
  archivePrefix={arXiv},
  primaryClass={cs.RO},
  url={https://arxiv.org/abs/2410.11110},
}